Agile AI Platform Architecture with the Agile Cloud Manager

Part 3 of 10: AI Models Break And Degrade Over Time

The full transcript of this video is given below the video so that you can read it at your own pace. We recommend that you both read and watch, which makes it easier to grasp the material completely.

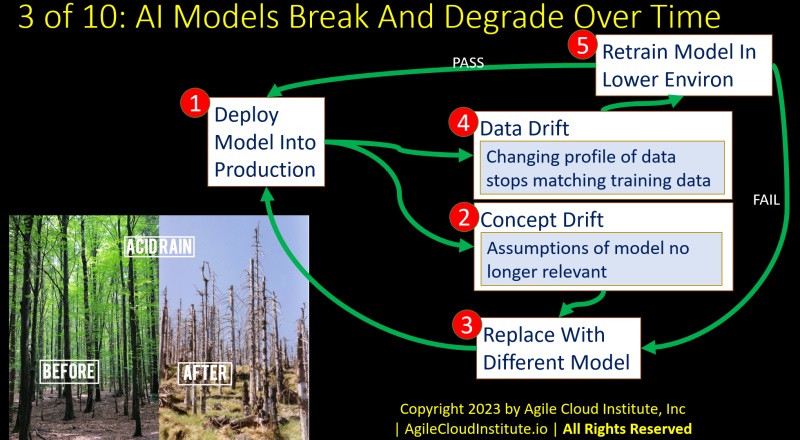

On this slide, we will examine how A.I. models break and degrade over time.

Let’s start by having a look at the two photos on the bottom left of the slide.

The BEFORE photo shows what a forest looks like in a healthy, normal state.

The AFTER photo shows how that same forest can look after acid rain destroys the ecosystem of the forest.

If you deployed an automated system to interact with the green forest that is shown in the BEFORE photo, then your automated system would become completely irrelevant after the same forest was destroyed as shown in the AFTER photo.

The context in which A.I. models operate can change just as drastically, and the changing context can cause A.I. models to break or degrade over time.

Number one on the slide illustrates a deployment of an A.I. model into production.

Number two on the slide illustrates concept drift. Concept drift occurs when the assumptions that led to the creation of the model are no longer relevant, much like what you see in the photos at the bottom left of this slide, in which acid rain destroyed the ecosystem of a forest.

Number three on the slide shows that, when concept drift occurs, you have to replace the A.I. model with a new and different model that is better suited for the new and different context.

Number four on the slide describes data drift. Data drift occurs when the profile of the data being ingested by the model in production stops matching the profile of the data that was used to train the model before it was deployed into production.
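As a rough illustration of what "the profile of the data stops matching" can mean in practice, here is a minimal sketch that compares one numeric feature from the training data against the same feature as seen in production. It uses the Population Stability Index, which is one common choice and not something this series prescribes. The function name, the bin count, and the sample data are all assumptions made for the example.

```python
import math
from typing import Sequence


def population_stability_index(
    training: Sequence[float],
    production: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one numeric feature; larger values mean more drift."""
    lo, hi = min(training), max(training)
    # Fall back to a width of 1.0 when every training value is identical.
    width = (hi - lo) / bins or 1.0

    def bin_shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Values outside the training range are clamped into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from causing log(0) or division by zero.
        return [max(c / len(values), 1e-6) for c in counts]

    expected = bin_shares(training)
    actual = bin_shares(production)
    return sum((a - e) * math.log(a / e) for a, e in zip(actual, expected))


# Hypothetical data: production values have shifted upward relative to training.
training_sample = [i / 100 for i in range(1000)]
shifted_production = [x + 5 for x in training_sample]

no_drift_score = population_stability_index(training_sample, training_sample)
drift_score = population_stability_index(training_sample, shifted_production)
```

A score near zero suggests that the two profiles match. Practitioners often treat a score above roughly 0.2 as a sign of significant drift, but that threshold is a rule of thumb that you would tune for your own data.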

Number five on the slide shows that, when data drift occurs, you need to retrain the A.I. model in a lower environment using new data that more closely mirrors the characteristics of production data. Ideally, you would train the model on production data, if your governance system allows that. At a minimum, you would need to generate training data that closely mirrors the characteristics of production data.

- PASS: If the retrained model passes all of the automated tests, then you deploy the retrained model into production to resolve the data drift.

- FAIL: If the retrained model fails the automated tests, then you conclude that concept drift has occurred, and you replace the model with a different model.
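The PASS and FAIL branches above can be sketched as a small decision function. This is only an illustration of the control flow described on the slide. The names `Action`, `handle_data_drift`, `retrain`, and `automated_tests` are hypothetical, and the "model" is a plain dictionary standing in for whatever artifact your pipeline produces.

```python
from enum import Enum
from typing import Callable, Sequence


class Action(Enum):
    DEPLOY_RETRAINED_MODEL = "deploy the retrained model"  # data drift resolved
    REPLACE_MODEL = "replace with a different model"       # treated as concept drift


def handle_data_drift(
    retrain: Callable[[], object],
    automated_tests: Sequence[Callable[[object], bool]],
) -> Action:
    """Retrain in a lower environment, then let the automated tests decide."""
    candidate = retrain()
    if all(test(candidate) for test in automated_tests):
        # PASS: the retrained model is promoted to production.
        return Action.DEPLOY_RETRAINED_MODEL
    # FAIL: retraining did not help, so concept drift is assumed.
    return Action.REPLACE_MODEL


# Hypothetical usage: the retraining step reports an accuracy score,
# and a single automated test enforces a minimum accuracy.
def accuracy_at_least_90_percent(model: object) -> bool:
    return model["accuracy"] >= 0.90


passing = handle_data_drift(lambda: {"accuracy": 0.95}, [accuracy_at_least_90_percent])
failing = handle_data_drift(lambda: {"accuracy": 0.60}, [accuracy_at_least_90_percent])
```

In a real platform, each of these steps would be a pipeline stage rather than an in-process function call, but the branching logic would stay the same.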

Take a moment here to consider the level of automation that is required to manage a large number of A.I. models throughout an enterprise.

Remember that the preceding slide showed there are use cases for A.I. in every aspect of your organization.

So there might be hundreds of A.I. models to be managed in a medium-sized company. And there might be many thousands of A.I. models to be managed in a very large company.
